...

Scope: This procedure provides instructions for running automated import, export, cleanup, reporting, and related jobs using scripts found on the LS Tools website and other tools created by the Library Systems Office and LTS Batch Processing staff. For issues surrounding the scheduling of large batch loads, please consult Bulk Loading: Scheduling (#46d). Unless otherwise noted, the items listed below are descriptions of scripts and tools found on LS Tools. Jobs may include scripts known as "mod jobs", which are run from a staff member's individual LS Tools folder rather than directly from the LS Tools web interface. Non-LS Tools jobs are most commonly referred to as "manual" jobs. For information on staff batch job assignments, please refer to Bulk Loading: List of Jobs (#46c). This procedure also covers loading records using the data import module in FOLIO.

Unit: Batch Processing and Metadata Management

Date last updated: 07/09/2019

Date of next review: July 2021

Note: Access to LS Tools is limited to trained technical services staff. Access is divided into several levels, depending upon the complexity and impact of the tools accessible at each level.

...

August 2022

- Upload file

- Select job profile

- Click run

- Reload page after 30 seconds or so

- Read the data import log

How to read the log

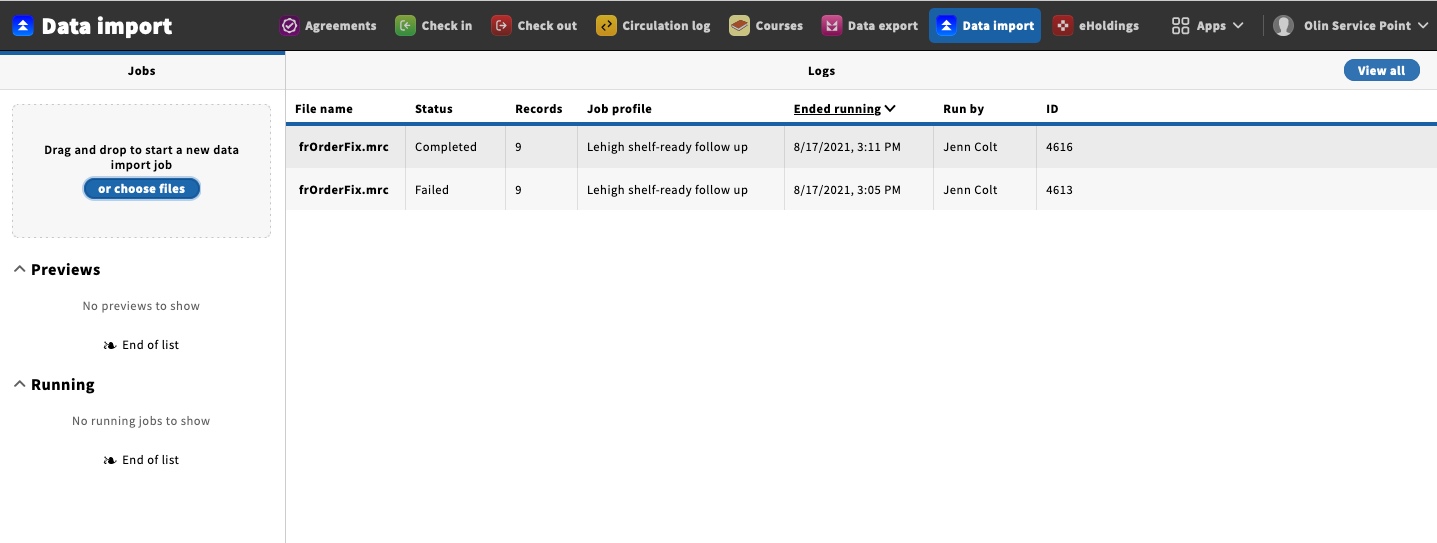

When you go to the data import page, you will see the 25 most recent data import logs. However, this view filters out the OCLC single-record imports. In the case of this screenshot, 23 of the most recent logs were from OCLC imports and only two were regular data import jobs. If the screen is blank, it means that all of the 25 most recent jobs were single-record imports. Each single-record import generates its own log entry. To see all of the data import logs, including single-record imports, click the View all button.
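The behavior described above can be sketched in a few lines of Python. This is only an illustration of the logic, not FOLIO code: the `visible_logs` function and the `single_record` field are hypothetical names, and the page size of 25 is taken from the description above.

```python
# Hypothetical model of the default data import log view: take the most
# recent logs, then hide the single-record imports among them.
PAGE_SIZE = 25  # number of recent logs the landing page considers


def visible_logs(logs, page_size=PAGE_SIZE):
    """Return the logs shown by default, given logs ordered newest first."""
    recent = logs[:page_size]
    return [log for log in recent if not log["single_record"]]


# 23 single-record imports and 2 regular jobs, as in the screenshot.
logs = [{"id": i, "single_record": True} for i in range(23)]
logs += [{"id": 23, "single_record": False}, {"id": 24, "single_record": False}]
# Older regular jobs fall outside the page and are not shown by default.
logs += [{"id": i, "single_record": False} for i in range(25, 30)]

shown = visible_logs(logs)
print(len(shown))  # 2
```

If every one of the most recent logs were a single-record import, `visible_logs` would return an empty list, which corresponds to the blank screen mentioned above.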

Once you are on the View All logs page, you will have access to a number of filters, including job profile, user name, and date. Note that the filter drop-down lists are made up of values from the data import logs currently being displayed. If you need to search on values other than those displayed, you can either scroll further back in the log or, if you are not looking for single record logs, filter them out by clicking "No" under the single record filter.

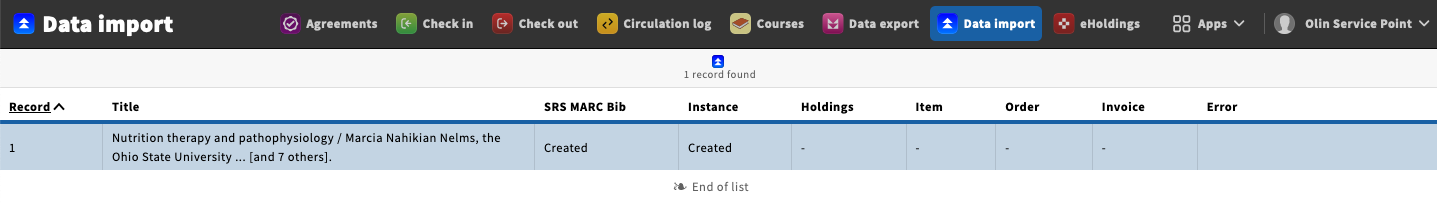

To view the record-by-record details of a data import job, click on the file name of the job you want to look at. Unfortunately, all the single record jobs and Lehigh jobs have "No file name" in the file name column. To identify a single record job that you want to examine, you'll need to go by the user name and date/time. After you click on the file name, you'll see the details for the records loaded in that job. In the job shown here, a single record was loaded. It created a new MARC bib and a new inventory instance; no actions were taken on holdings, items, orders or invoices:
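As an illustration of narrowing down single record jobs by user name and date/time, here is a minimal Python sketch. It assumes the log entries have already been copied into a list of dictionaries; the field names (`file_name`, `user`, `completed`), the sample users, and the five-minute window are all hypothetical and are not part of FOLIO.

```python
from datetime import datetime, timedelta


def find_single_record_jobs(logs, user, around, window_minutes=5):
    """Return 'No file name' jobs run by `user` within a window around `around`."""
    window = timedelta(minutes=window_minutes)
    return [
        log for log in logs
        if log["file_name"] == "No file name"
        and log["user"] == user
        and abs(log["completed"] - around) <= window
    ]


# Made-up sample entries for illustration only.
logs = [
    {"file_name": "No file name", "user": "staff_a", "completed": datetime(2022, 8, 1, 10, 2)},
    {"file_name": "No file name", "user": "staff_b", "completed": datetime(2022, 8, 1, 10, 3)},
    {"file_name": "vendor_load.mrc", "user": "staff_a", "completed": datetime(2022, 8, 1, 10, 4)},
    {"file_name": "No file name", "user": "staff_a", "completed": datetime(2022, 8, 1, 14, 30)},
]

matches = find_single_record_jobs(logs, "staff_a", datetime(2022, 8, 1, 10, 0))
print(len(matches))  # 1
```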

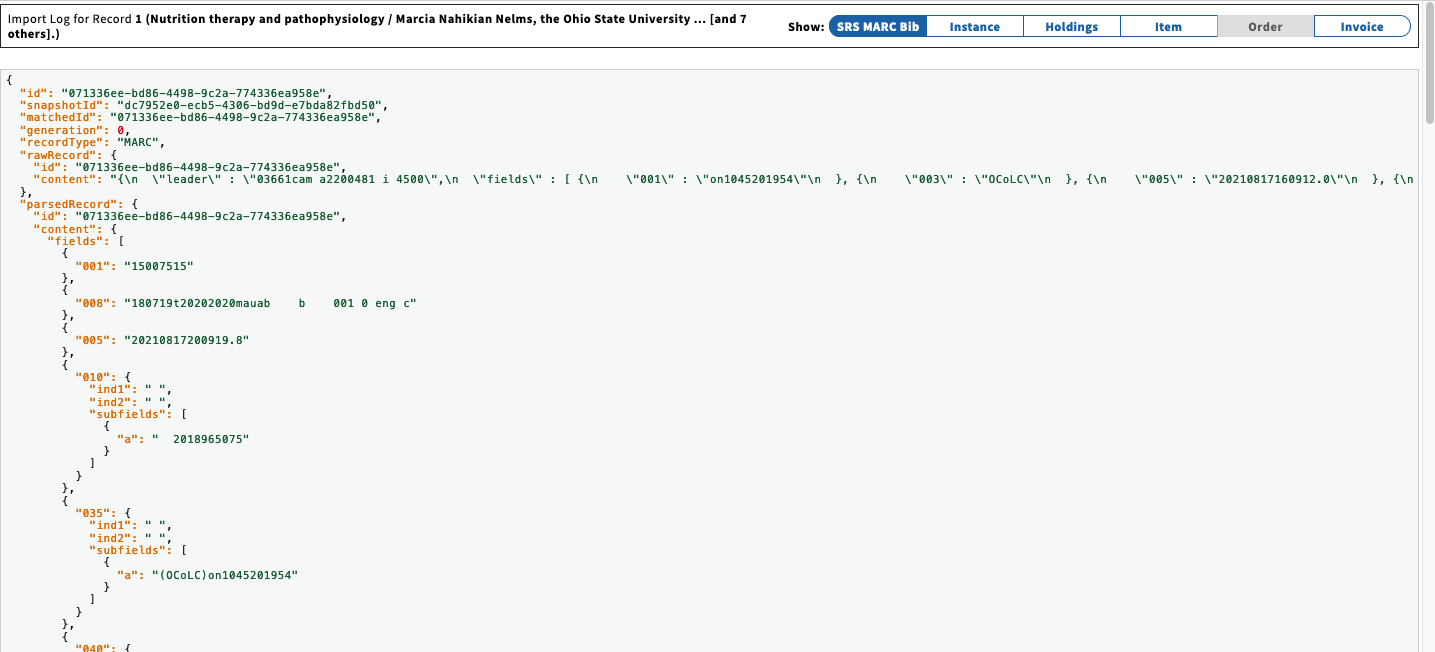

For even more detailed (and technical) information about the record, click on the title of the record. This will bring you to a screen that displays the JSON for the MARC bib, the inventory instance, and any other record types associated with the record that was loaded. Most useful here is the 001 toward the top of the JSON document, which is also the HRID for the instance. You can take the number from the 001 in the JSON and search for it in the HRID search in inventory, which will allow you to examine the actual record that was loaded.
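If you have copied the MARC bib JSON out of that screen, the 001 can also be pulled out programmatically. The sketch below assumes the JSON follows the common MARC-in-JSON layout (a `fields` list in which each entry maps a tag to its value); the JSON shown on your screen may be nested under additional keys, and the `get_001` helper and the sample record are made up for illustration.

```python
import json


def get_001(marc_json):
    """Return the value of the first 001 control field, or None if absent."""
    for field in marc_json.get("fields", []):
        if "001" in field:
            return field["001"]
    return None


# Made-up sample record in MARC-in-JSON form (assumed layout).
sample = json.loads("""
{
  "leader": "00000nam a2200000 a 4500",
  "fields": [
    {"001": "12345678"},
    {"245": {"ind1": "1", "ind2": "0", "subfields": [{"a": "Example title"}]}}
  ]
}
""")

hrid = get_001(sample)
print(hrid)  # 12345678
```

The returned value is the number to paste into the HRID search in inventory.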

...

*NOTE: Some scripts are placed at this level during the later stages of development and are then moved to a lower level once development is complete, so that the staff assigned to run them can access them when they are ready for production. Some scripts for one-time, large-scale projects are placed here for use by expert-level users and are removed once the project is complete.

...

I. “General Update” Section of LS Tools

A. Move LTS or Law EDI File to Voyager Folder

B. Check for/Remove Carriage

C. MARC Modification

D. ENACT Bulk Import

E. Prepare a Vendor e-Book Load

F. Prepare a Vendor Print Load

II. “Voyager Harvest” Section of LS Tools

A. MARC File Examination

B. View Harvest Output

C. Develop a Harvest query to identify Voyager Record

D. Extract MARC Records from Voyager

III. “CLAMSS subsystem”

I. “Voyager Update Utilities” Section of LS Tools

A. Prepare a Miscellaneous MARC Input File

B. Execute a Privileged Production Procedure

C. Queue BulkImport

D. Run a Voyager Utility Program

II. “Voyager Reporting Utilities” Section of LS Tools

A. Execute a Report or Extract

B. Match BibIDs from external list

C. Other reports/extracts, which are NOT in LS Tools

Batch Processing/Heads/Expert Group

I. “ALL LS Tools Production” Section of LS Tools

A. Business Analysis Web Displays

B. Cron Jobs for Interaction with OCLC

C. Execute a Google Utility

D. Cron Jobs with General Access

E. Cron Jobs with Privileged Access

F. Execute an Administrative Production Procedure

I. “Administer LS Tools Itself” Section of LS Tools

A. Report/Clean Up Voyager MARC Record Errors

...